chatgpt

Track Time in GPT with Code Interpreter

creating a "loading bar"!
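The title above points to a Code Interpreter trick for tracking time. Here is a minimal sketch, assuming a plain Python environment like the one Code Interpreter provides. It draws a text loading bar that redraws itself in place with a carriage return and reports elapsed time. The function names `render_bar` and `run_with_progress` are hypothetical and do not come from the post. Whether the bar animates live depends on how the environment streams output.

```python
import time


def render_bar(fraction: float, width: int = 20) -> str:
    """Return a text loading bar such as '[#####---------------]  25%'."""
    fraction = min(max(fraction, 0.0), 1.0)  # clamp progress to [0, 1]
    filled = int(round(fraction * width))
    return "[" + "#" * filled + "-" * (width - filled) + f"] {fraction * 100:3.0f}%"


def run_with_progress(steps: int, delay: float = 0.1) -> float:
    """Simulate `steps` units of work, redrawing the bar; return elapsed seconds.

    Hypothetical helper: time.sleep stands in for real work.
    """
    start = time.perf_counter()
    for i in range(1, steps + 1):
        time.sleep(delay)  # placeholder for one unit of real work
        elapsed = time.perf_counter() - start
        # '\r' moves the cursor back so the bar is redrawn on the same line
        print(f"\r{render_bar(i / steps)} {elapsed:5.1f}s", end="", flush=True)
    print()  # finish the line once the work is done
    return time.perf_counter() - start


if __name__ == "__main__":
    run_with_progress(10)
```

Splitting rendering from the work loop keeps `render_bar` easy to test and reuse with any source of progress.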

conversations from the future -- today!

chatgpt

New UX patterns are being discovered every day!

chatgpt

quick tip!

chatgpt

a GeoGuessr-like game with DALL-E, Code Interpreter, and ChatGPT

instabram

Where do you see yourself in four months?

chatgpt

do large language models create noise on the internet or do they help us become more critical thinkers?

chatgpt

From 2.5 million years ago to the agricultural revolution, can GPT write an accurate timeline of human history? Let's test its abilities and discover the power of asking the right questions.

chatgpt

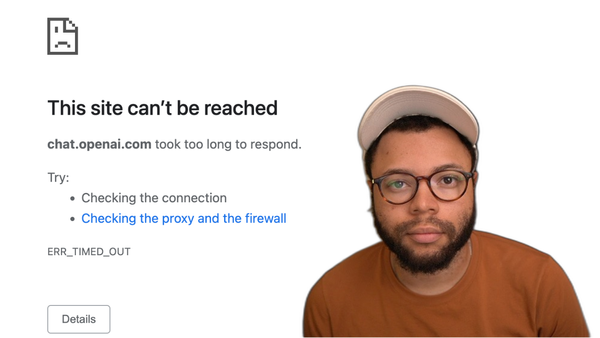

What do you do when you're offline but still want to use GPT models? In this video, I share tips and tricks on how to game with GPT without actually being able to use GPT.

chatgpt

Are you tired of feeling drained and unproductive? What if an AI-powered app could help you manage your time and energy to boost your productivity levels? In this video, we explore the potential of integrating popular time management frameworks with energy management, all with the help of GPT.

chatgpt

Discussing a four-state framework for working with generative pre-trained models, built around kindling, escalating, verifying, and goal states, with the aim of getting the best possible results.

chatgpt

Using GPT-3.5 and GPT-4, we experiment with different approaches to keeping the essence of a long, poetic quote intact.

chatgpt

Struggling to fit exercise and meditation into your schedule? Me too! Let's put GPT to the task and discover how scheduling problems, a family of computer science problems many of which are NP-hard, can help us!
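Here is a concrete sketch of one scheduling variant: fitting tasks of fixed length into free time slots so that as many minutes as possible get scheduled. This is a swapped-in illustration, not the method from the video. It is essentially a bin-packing-style problem solved by brute force. `best_schedule` and the example task and slot names are hypothetical.

```python
from __future__ import annotations

from itertools import product


def best_schedule(tasks: dict[str, int], slots: dict[str, int]) -> dict[str, str | None]:
    """Hypothetical helper: exhaustively assign each task to a slot or leave it out.

    `tasks` maps task name -> minutes needed; `slots` maps slot name -> free minutes.
    Returns the assignment (None = unscheduled) that schedules the most minutes
    without overfilling any slot. It tries (len(slots) + 1) ** len(tasks)
    combinations, fine for a handful of tasks but exponential in general.
    """
    names = list(tasks)
    options = list(slots) + [None]  # None means the task is not scheduled
    best: dict[str, str | None] = {}
    best_minutes = -1
    for choice in product(options, repeat=len(names)):
        used = {slot: 0 for slot in slots}
        for name, slot in zip(names, choice):
            if slot is not None:
                used[slot] += tasks[name]
        if any(used[s] > slots[s] for s in slots):
            continue  # at least one slot is overfilled; reject this assignment
        minutes = sum(used.values())
        if minutes > best_minutes:
            best, best_minutes = dict(zip(names, choice)), minutes
    return best
```

For example, `best_schedule({"run": 30, "yoga": 40, "meditate": 15}, {"morning": 45, "evening": 40})` puts the run and meditation in the morning and yoga in the evening. Exhaustive search keeps the sketch short and exact. A real planner with many tasks would need a heuristic or a dedicated solver instead.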